I recently learned about something called the continued influence effect. I read about it in a newspaper article here. This effect refers to the observation that outdated or incorrect information can continue to influence our beliefs even after it’s been corrected. From the article:

The continued influence effect manifests when we are presented with evolving information, such as with news updates around current events or with a changing scientific understanding around topics related to health. Knowing about this effect can help us understand how we’re all susceptible to the influence of outdated or problematic information, especially when trying to navigate the deluge of news and information we receive nowadays.

They give the example of MSG in Chinese food. It all started with a flawed 1968 report describing a set of symptoms supposedly caused by MSG, including headache, throat swelling and stomach pain, developing shortly after ingestion of the food additive, which is used for its delicious umami flavor. Later studies have shown that MSG in normal serving sizes has little or no effect on the vast majority of people, yet the idea that MSG is bad for you still persists, even earning the name “Chinese Restaurant Syndrome.”

So why is it so difficult to update our attitudes when new information is presented? In trying to answer this question, researchers conducted a study in 1994 in which participants were asked to read a series of reports about a warehouse fire. From the article:

These reports included information about volatile materials, like oil paint and pressurized gas canisters, found in a closet. Later, participants were given updated information that the closet was actually empty; there were no volatile materials found. However, despite this correction, participants still relied on the original reports when making later judgments, saying, for example, the reason they thought the fire was particularly intense was that oil fires are difficult to extinguish.

This continued reliance on the corrected information was not due to failure to understand the new information; the majority of participants were able to accurately report that the closet was indeed empty when asked directly. Yet, they still relied on the retracted information in their future judgments related to the fire’s cause.

Instead, the continued influence effect can be thought of as a result of how our brains store and update information. When we receive a correction about something we already know, we don’t simply erase the old information from our minds. Rather, representations of both the old information and its correction coexist in our brain’s knowledge networks, playing a role in guiding our future judgments and beliefs. We’re especially likely to rely on old information when it’s the only explanation we have, or the one that comes to mind the easiest. When searching for reasons why our stomachs hurt, the warnings of fellow Yelpers make a compelling argument when they’re the most plausible explanation we can think of.

The good news is that this tendency also lends itself to a solution: When giving a correction, also provide people with new information to take the place of the old. In the case of the warehouse fire study, researchers found that when, along with the corrections about the contents of the closet, they also shared an alternative potential cause for the fire — arson materials found elsewhere — participants relied less on the outdated information. Similarly, you might explain to people that perhaps the reason for their post-orange chicken funk isn’t the MSG, but instead that the dish they chose had a lot of oil, a culprit for many of the same symptoms.

You can see this problem happening in real time in the current political climate in the USA. Musk will say that $50 million was spent on condoms for Hamas, Trump will then say it was $100 million (this all really happened), then it is debunked over and over again, but now both thoughts are living rent free in your head. The $50 million number will influence your ideas on the need for foreign aid even though you know it is false. Add “vaccines cause autism,” or any number of other pieces of bad science that get stuck in our heads.

Since this is a Mormon themed blog, let’s steer this towards the Church. It was said over and over that Blacks did not hold the priesthood because they were less valiant in the pre-mortal life, and that the dark skin was a curse. Then the Church comes along, first trying to gaslight you into thinking they never taught that, and then saying that it is wrong. Now you have both ideas in your head, with both influencing how you think about your fellow Black church members.

According to the article, the way to get the bad information out of your head is to offer an alternative reason for the prohibition of Blacks holding the priesthood. The way to do that is to tell the truth, that Brigham Young and many of the leaders were racist. This is too hard, so many members live with the continued influence effect. The only solution the Church seems to see as viable is to just wait until all the members who were taught the old reasons die out.

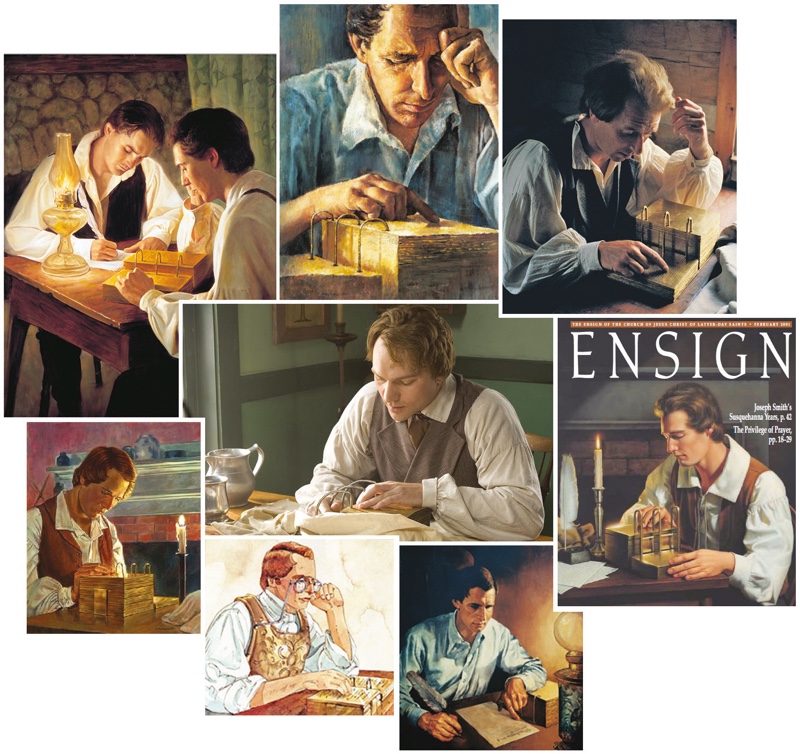

You can add any number of Church examples here. Joseph Smith translated the BofM looking through glasses called a Urim and Thummim, or he just looked at the pages directly and translated them. This has all been replaced by the real truth that he used a stone in a hat for all the pages that we have today as the BofM. The church even published a photo of the stone he used. But those pictures from the Ensign are still in our heads, and still influencing our thoughts.

What are some examples of the continued influence effect you see in the church?

I took my first CPR class around 1983. I was instructed about the importance of the ratio of chest compressions to breaths, 30:2. When returning for future CPR classes, there were changes and we had to memorize new ratios to pass the quizzes. By the mid-1990’s it was required to take annual CPR classes for my profession. I even attained a CPR teaching certificate. Every 5 years there would be some minor and then also some major changes to the CPR regime. Then it frankly got confusing and contradictory, since I had already cemented my learning from the initial years. Now, I just learn the information to pass the quiz, and purposefully forget it walking out the door.

Now the governing bodies admit, “Most of the CPR guidelines are based on a very low-quality body of evidence. Although millions of cardiac arrests happen each year globally, high-quality clinical research on resuscitation is very difficult to conduct, due in part to challenges in obtaining consent and randomization.”

From the LDS context, I went to the temple for the first time in 1989. Yes, the ceremony with the violent penalties. I was instructed to learn “word for word and replicate with exactness for my own salvation.” This was cemented by attending 1x/week for the 9 weeks in the Provo MTC. We never attended the temple during my mission. Then when I returned home, I went to the temple. In a period of only 2 years, whole parts that were essential for my salvation were no longer in the ceremony. We all know the history of the ongoing changes with the temple.

When I still frequented the temple, the continued influence effect was in action. I would still think in my mind of the entire execution of the penalty, instead of performing only the 1/4 action the church had truncated it to. When in the prayer circle I would think in my mind the words of the 3-word chant we were originally taught. Since 2005 there have been ongoing changes. How many of us still started to stand, although it was eliminated? How many of us remember/have flashbacks to the embarrassment of walking in the temple with an open, body-exposing shield? How many of us tried the 5 points of fellowship at the veil?

Now, CPR is being changed to CCR (Cardio-cerebral Resuscitation). This is revolutionary. They recognize the limitations of the past instruction and are looking to improve for the next generation. However, if I had to perform CPR in a true emergency, I may instinctively go back to what I learned in the 1980’s. (I fortunately never had to perform CPR and hope someone more experienced is near if needed.)

I have not attended the temple since 2019. I read online that there is no longer a witness couple, Adam and Eve actually dress in the temple clothes in the film/drawing, there is no touching with tokens until the veil, and there is an informed consent of 10 minutes prior to the eternal covenants. That is not the temple ceremony I learned and was admonished to follow. If I were to attend the temple today, would I out of habit replicate words and actions I learned initially? Would I be admonished for doing it “wrong”? For those still attending the temple, how often do you revert to or think about the previously taught actions?

Faith,

I hear what you’re saying about the temple changes. Things are changing so fast nowadays, and sometimes so subtly, that rather than mentally supply the missing elements from the pre-1990’s Endowment, I find myself hearing something and thinking, “Hey, is that new or did I just miss it last time?” The 1975 Endowment is still in my mind somewhere, but the firehose of recent changes, largely made possible by the new slideshow format, makes it easier to treat it as a relic of my past.

There is a long list of things that I was taught but that have since been quietly altered.

To build off the “translation” of the B of M, I can add that I was vehemently taught that Native Americans were the descendants of the people of Nephi. Turns out that’s not true and now the cover page says they’re “among the ancestors”. Along with the B of M, we have the book of Abraham, which we were told was the translation of Egyptian scrolls. Turns out that’s not true, now it’s just a religious text. Despite the change of narrative, I think most members still believe the original teachings.

The church enjoyed a period of positive perception with the “I’m a Mormon” campaign. Now that’s considered a slur. Those of us who aren’t TBM still call members “Mormon”.

We have the popular topic of polygamy. God sometimes commands it, but it’s abhorrent in His eyes. It isn’t currently practiced, but actually it is in the eternal sense, as men continue to be sealed to multiple women. The only difference today is they’re not all alive at the same time. A bit of a contradiction on this one. Members will say the church doesn’t practice it, but are aware it still is practiced, so I’m not sure how they justify that one.

The church used to actively teach that if you’re faithful/righteous/etc you will get your own planet with your eternal mate. They deny that now, but people definitely still believe.

There are more, but I’ll leave some for others to share.

For the record, I never wanted my own planet. Maybe a nice garden plot of a half acre or so. An arts and crafts house with stained glass windows and a nice library. One wife who is the love of my life. A few spare rooms so the grandkids can come and stay when life gets a little much. But a planet? A man needs to know his limitations.

I think the church makes all of this worse by never telling us the “new information” they received or why they made the change. For example, with the blacks and priesthood, it was actually years before they officially even disavowed the “less valiant” reason and they never gave an explanation for why blacks went so long being denied priesthood. So, years later, they still have Randy Bott teaching at BYU that blacks were not given priesthood with the only explanation ever given, even if the church had said that was not why. It was still the only explanation ever given because they are too “infallible” to admit that Brigham Young was racist. And with the temple changes, the old wording made me feel like I was not a daughter of God, only the wife of his son. But they changed the wording without apology or explanation and I still FEEL like the old wording is true, that I am not a child of God, just the unloved wife of a beloved son.

Without the corrected information, it is even harder to change what one thinks. But the church doesn’t apologize or explain, so I still feel that Mormon God doesn’t love his daughters and why would I worship such a god?

I think of the word “Mormon” and how it was used by the enemies of the church as a name for the members of the church. Then it became embraced by the members and became a point of honor and community. Now because of a new revelation by the prophet, it’s a name of derision again, except it’s the members pointing fingers at members for calling themselves Mormon. It also happens to be the same prophet that said the Book of Mormon was translated by looking into a hat. No wonder so many didn’t believe him when it came to masks or vaccines. Don’t those people look at themselves as the most righteous of us all?

in: we are traditional Christians

out: we don’t wear crosses nor do we follow traditional Christmas traditions

in: members of the COJCOLDS

out: Mormons

in: you’ve already made covenants

out: agency

in: temporary commandments

out: commandments

in: the ongoing restoration

out: the restored Gospel

in: stake presidency to conduct councils of love

out: High Council to participate in discipline council

in: sleeveless garments

out: the concept of porn shoulders

in: business casual and pants for women

out: exclusively FBI suits and pioneer dresses

in: YM and YW programs totally unstructured

out: Boy Scouts and YM/YW presidencies

in: temple movie

out: temple slide show

in: full-time missionaries call home weekly

out: talking to family during full time mission is an unnecessary distraction

in: if you act gay you are sinning

out: if you are gay you are sinning

in: BOM and BOA were inspired

out: BOM and BOA were translated

in: Diet Coke, Coke, etc at BYU

out: “there is no demand”

I don’t know. Something called the “Continued Influence Effect” makes it sound like either some sort of bias or perhaps an unwillingness or inability for an individual to change.

To me the changes seem more like gaslighting.

America is the leader of the free world. Trump just abdicated this role. 3 times last week he sided with Russia against Ukraine. The rest of the free world voted to help Ukraine, and after Zelenskyy was bullied by Trump in public, he then met with European leaders who supported him. These European leaders, contrary to Trump’s lies, are already supplying more aid than America, and will continue to.

Trump also claims America has sent $350 million in aid and wants some compensation. The amount the state department says is 59 million since 2014 when Russia invaded Crimea, and that American money will be missed.

Please look up the Budapest Memorandum. It was signed by Russia, America, UK, France, and Ukraine in 1994. Ukraine at the time had the third largest stockpile of nuclear weapons and agreed to give them up in return for the other signatories agreeing to defend Ukraine’s borders and sovereignty. Russia and now America have reneged.

America is the land of the free. You just joined the Autocrats without protest. In Greece there is mass protest, and a national strike, because the government has not responded appropriately to a train crash that killed 69 people. At what point does America protest?

American institutions are strong enough to survive Trump? He ignores the rule of law, and the electoral law. Why would you think he will not destroy them?

I’m afraid the Church is irrelevant. 2/3 of members, and presumably leaders, voted for Trump, which indicates a moral vacuum. What has religion to recommend it if it no longer has morality?

Bill, you might be interested. We have a cyclone off the coast, and it is expected to come ashore in the next couple of days. We have not had a cyclone this far south, but ocean temperatures are high at 27°C.

17 meter waves have been recorded. Surfers are out.

Ah yes, zombie ideas. I still hear people saying that polygamy was created by Brigham Young to help widows whose husbands died from persecution/crossing the plains.

It’s a cruel irony that we live in the age of information and yet zombie ideas flourish. Goes to show the power of confirmation bias.

Geoff, maybe insightful, maybe correct, but maybe also off-topic?

We have the saying that nature abhors a vacuum, and same for our minds. When we don’t know something, people often create something to fill the hole. What we do matters, but to some extent what we did formerly also matters. Sometimes we try something, it works more or less until it doesn’t work anymore, and we either stay the course or make a change. It is normal for crew to ask the captain (a) why did we make the original decision, and (b) why did we make the course correction. The captain can provide an answer, such as winds were blowing us off course, or he can tell the crew to go away as it isn’t their place to question the captain.

The official LDS church narrative is a continued influence effect. It all starts with the narrative of the “First Vision”. It is now well documented that the 1820 First Vision story (a) was not important in the founding of the LDS church, (b) is not historically correct, (c) is a revised story that varies greatly from earlier versions and (d) demands claims of theological certainty that simply are not demonstrated.

I personally love the canonized First Vision story as a story. But the importance of the story to the LDS church has always puzzled me – and to the modern leadership the story is the most important thing of the Restoration. Per the canonized story nothing important happened after the First Vision except some people were said to have criticized Joseph Smith for claiming it. At best and per Joseph Smith’s own words, Joseph Smith had a poignant spiritual experience and some people doubted him. And with that poignant spiritual experience, Joseph Smith spent the next several years being a teenager. And per actual history, the Smith family still went and joined the Presbyterian church – apparently they were not on pins and needles waiting for Joseph Smith to have another visit from God.

So why does the LDS church leadership pin everything on the First Vision? Tangible proof and test of Joseph Smith’s prophetic call is The Book of Mormon. Why not focus on that? Why lean on a “vision” that cannot be proven and has been shown to be dramatized history?

I don’t know. What I observe is every generation of LDS leadership reinforces the First Vision narrative and they do so independent of evidence. We see this pattern in the church essays on the First Vision where it is acknowledged that multiple First Vision stories exist and conflict. The contradictions in the story versions are simply dismissed as trivial. In reality, the contradictions are significant and point to a story being manipulated over time to achieve a different effect. But can the LDS church admit this manipulation? Apparently not.

I’m pretty sure my parents still believe the church is against contraception.

The list is long, and others have enumerated many examples. I would guess that the phenomenon is unusually strong in the church because the leadership are so allergic to ever contradicting anything said by their predecessors, or openly acknowledging change. But old ideas definitely stick around in people’s minds. Waiting for the ideas to die off with the generation that was taught them makes for a very slow pace of change.

Church leaders also preach question-shaming.

Consider Robert Millet telling missionaries at the MTC a number of years back to answer the questions people should have asked.

Or consider Brad Wilcox telling youth that they’re asking the wrong question in asking why blacks couldn’t have the priesthood until 1978:

“‘why did the Blacks have to wait until 1978,’ maybe what we should be asking is, ‘why did the whites and other races have to wait until 1829?’”

Couldn’t’ve said it better myself, A Disciple.

As I’ve prioritized source documents in my study of Church history, it’s become increasingly clear that—whether reducible mostly to profit motive or not—the Church was founded as a vehicle for promoting the Book of Mormon. One Redditor said it best: there’s a good chance JS shopped the BoM to some local congregations and, disappointed in the response, decided to start his own church. Chalk it up to the Continued Influence Effect, or bolstering authority claims, but the interpretations don’t add up.

(Please excuse the improper attribution!)

The illustration above about the fire is perfect! Rather than fully correcting the record, the church just stops teaching things. And as illustrated in the fire example, without the full picture being presented, the members fill in the gaps with the original messaging. The Church’s position is to simply stop talking about things without correcting the record. This may be a bug of the way the church communicates, but personally I think it’s a feature.

This seems to be the MO of the current US executive branch. Bombard people with so much information, lie if you have to about immigrants eating pets and government workers being lazy and Ukraine starting a war in their own country with Russia, and the seed is planted. There is no time in the fast paced 24 hour news cycle to ever correct the arguments. Because tomorrow is another day filled with new stories about finding 20 hundred gazillion dollars wasted on tampons mailed to the Martians and eleventy-hundred kazillion dollars of social security checks sent to the Democrats’ dead pets.

The easy answer would be for people to stop being so gullible. PT Barnum knew what he was talking about.

A consistent theme among people making accusations of gaslighting is a failure to point to specific instances. That’s understandable when discussing gaslighting in its more formal sense—individuals engaging in this kind of behavior typically don’t write it down. It’s less understandable when it comes to institutions, which, by their nature, communicate via publications, which leave a paper trail.